Serverless architecture fundamentally changes security. Sometimes for the better. Sometimes for the worse. Sometimes somewhere in the middle. To keep data and applications secure in our increasingly serverless world, startups and enterprises of all sizes need to understand what’s different, why it matters, and what they should do to protect themselves.

In this article, we provide some background on serverless architecture and take a look at one expert’s perspective on its implications for security and the best way to navigate these changes.

What Is Serverless Architecture?

Serverless architectures are cloud-based and service-based. This means that, instead of provisioning and managing their own servers, organizations buy cloud computing services such as the following:

- Software as a Service, or SaaS, delivers centrally hosted and managed software on demand to end users over the internet (e.g., Gmail, Slack, Jira, SAP, Salesforce). These are often cloud-native applications.

- Infrastructure as a Service, or IaaS, delivers computing resources such as networks, data storage, and virtual machines, which the end users must manage.

- Platform as a Service, or PaaS, builds on IaaS by allowing users to deploy and run applications on managed platforms.

- Function as a Service, or FaaS, is a specialized type of PaaS that allows users to develop, manage, and run application functions on managed platforms (e.g., AWS Lambda).

What do businesses get from all these “as a service” offerings? An astonishingly wide range of capabilities, including AI and machine learning, API management, application integration, AR/VR, blockchain, business applications, computing power, content delivery, data analytics, databases, cloud-native development, migration, networking, robotics, storage, and countless other areas.

Leading Cloud Providers

- Amazon Web Services, or AWS, offers a huge set of tools with mighty, continually improved capabilities that have yet to be matched. AWS is the clear leader in terms of market share.

- Google Cloud Platform, or GCP, provides a growing list of services distinguished by their strong security and deep technical foundation.

- Microsoft Azure is often favored by enterprises given its ability to integrate with existing organizational systems and processes. It’s also one of the fastest cloud solutions.

Why the Shift to Serverless Computing?

Serverless computing brings distinct benefits:

- Easier, faster deployment.

- Increased flexibility, scalability, and accessibility, along with improved latency, which help speed up innovation, collaboration, and cloud-native development.

- Automatic software updates.

- No more worries about server-level security.

- Reduced costs, since you’re effectively outsourcing all the duties required to manage and secure your own servers. With serverless, all services are “pay as you go.” The infrastructure is there if you need it, but you don’t pay for what you don’t use.

Given these obvious benefits, businesses of all sizes and across industries have been quick to embrace serverless. RightScale’s 2019 “State of the Cloud” report from Flexera found that 84% of enterprises have a multi-cloud strategy, and that public cloud spend has reached $1.2M annually for half of enterprises. Gartner’s April 2018 report, “An I&O Leader’s Guide to Serverless Computing,” predicted that more than 20% of enterprises globally would have deployed serverless computing technologies by 2020.

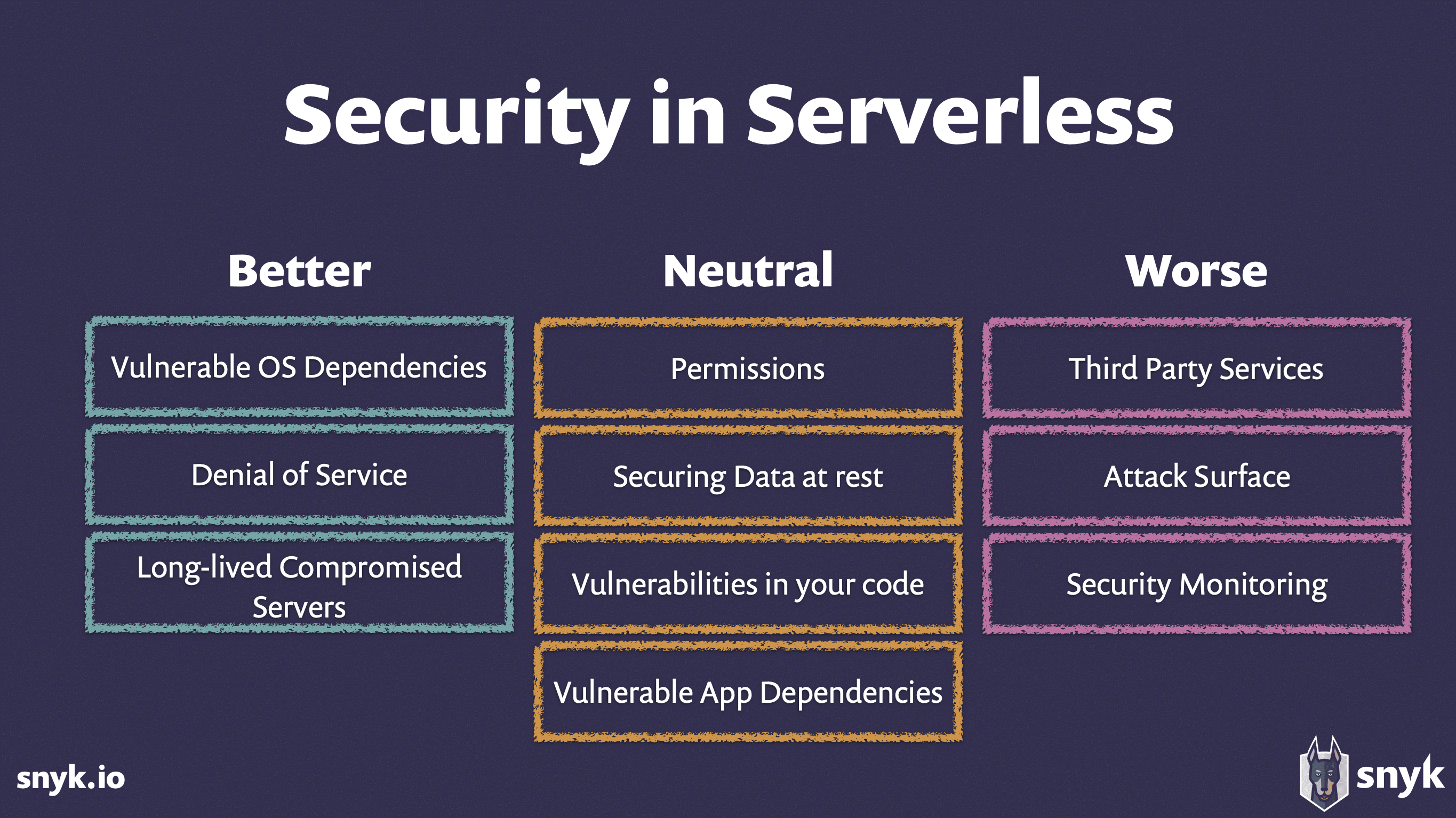

When Is Serverless BETTER for Security?

In his talk at O’Reilly’s 2017 Velocity Conference, “Serverless Security: What’s Left to Protect?”, Guy Podjarny, security expert and co-founder of open source security platform Snyk, distilled several key insights. We draw on his perspective and recommendations in the next few sections. (Podjarny’s full talk can be found here.)

As Podjarny explains, serverless offers security benefits worth celebrating. You no longer have to worry about:

- Vulnerable operating system (OS) dependencies, where a single flaw in a widely shared OS library leaves every server running it exposed. Remember Heartbleed, the 2014 OpenSSL bug? Severe, highly prevalent vulnerabilities like this are scary for businesses. With serverless, the platform patches the OS, so there’s no longer any need to “patch” your servers.

- Denial of Service (DoS), which is what happens when a stream of heavy requests from one customer takes down your servers — leaving you unable to serve other customers. Since serverless auto-scales, capacity flexes to meet demand. But there are caveats. Specifically:

  - Serverless platforms do enforce some limits (e.g., AWS Lambda’s default limit is 1,000 concurrent executions per region).

  - Your bill flexes, too: If you’re experiencing extra traffic, you’ll pay for it.

  - Since serverless is not pipeless, you’re still vulnerable to network-level DDoS floods of data.

- Long-lived compromised servers like the one that led to Equifax’s severe 2017 data breach. Serverless creates a state… of statelessness. Even a compromised server doesn’t live long. This means attackers have to repeat their attacks many times in shorter time windows, increasing their risk of detection and reducing their chances of success. This one has a caveat, too, however:

  - Serverless isn’t entirely stateless. Platforms often reuse containers for the same function, and files written to local storage (such as /tmp on AWS Lambda) can survive between invocations. An attacker who compromises a function can modify those files, and the next invocation may run the attacker’s compromised code. (In this area, FaaS is better than PaaS, because PaaS servers are long-lived.)
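Because a warm container can carry files from one invocation to the next, a function that caches anything on local disk should treat that cache as untrusted. The following is a minimal Python sketch. It assumes a handler that caches a helper artifact in the temp directory (which is /tmp on AWS Lambda) and pins the artifact’s expected SHA-256 digest at deploy time. The artifact name, the `fetch` callback, and the `load_cached_artifact` helper are all hypothetical.

```python
import hashlib
import os
import tempfile

# Temp directory; on AWS Lambda this is /tmp, which survives warm invocations
CACHE_DIR = tempfile.gettempdir()


def sha256_of(path: str) -> str:
    """Return the hex SHA-256 digest of a file's contents."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def load_cached_artifact(name: str, expected_digest: str, fetch) -> bytes:
    """Reuse a cached file only if it still matches the pinned digest.

    `fetch` is a hypothetical caller-supplied function that returns fresh
    bytes from a trusted source (e.g., an object store download).
    """
    path = os.path.join(CACHE_DIR, name)
    if os.path.exists(path) and sha256_of(path) == expected_digest:
        with open(path, "rb") as f:
            return f.read()
    # Cache is missing or was modified by an earlier invocation: refetch and verify
    data = fetch()
    if hashlib.sha256(data).hexdigest() != expected_digest:
        raise ValueError("fetched artifact does not match pinned digest")
    with open(path, "wb") as f:
        f.write(data)
    return data
```

The design choice is that the pinned digest, not the presence of the file, decides whether leftover state is trusted.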

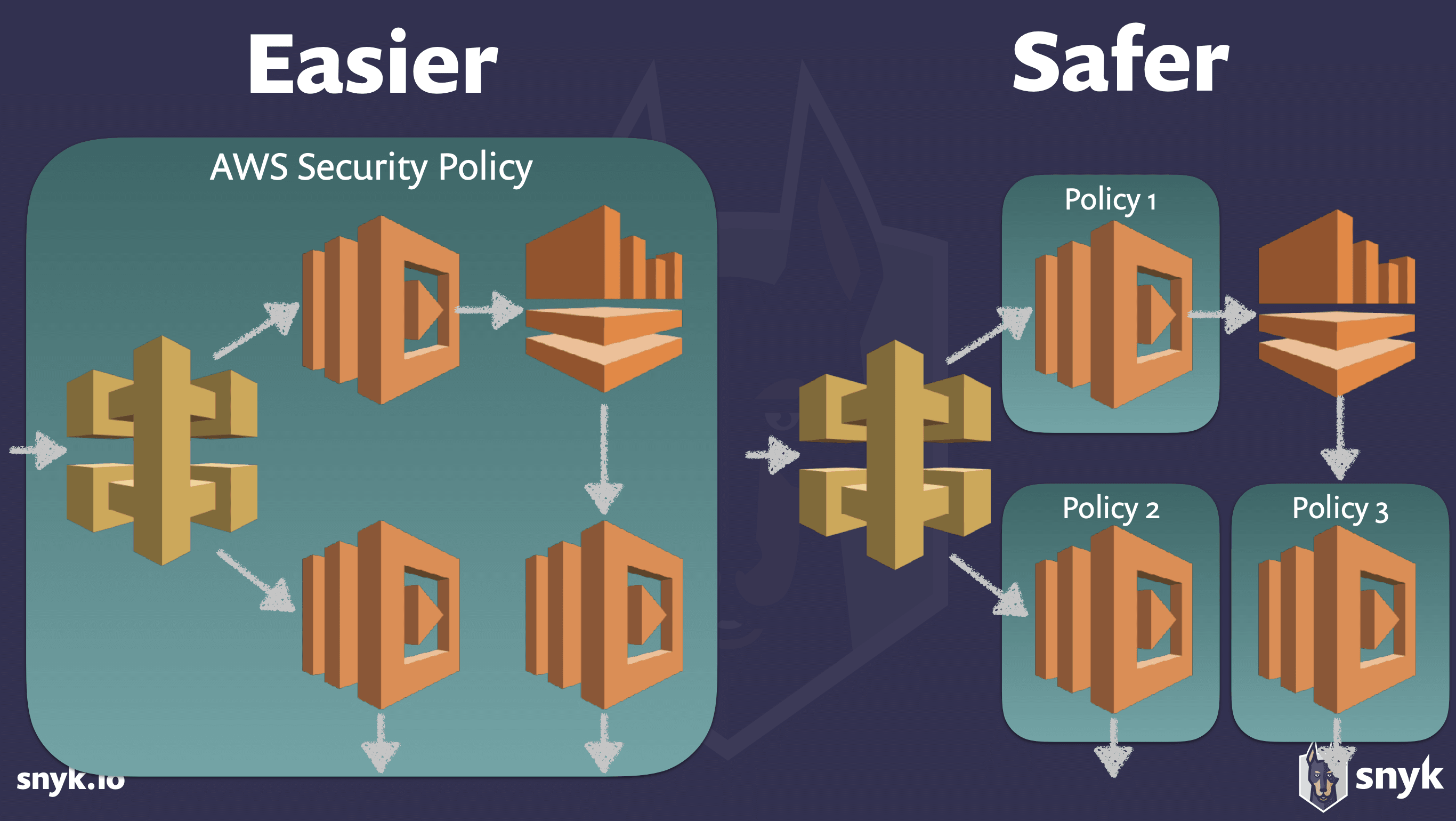

When Is Serverless NEUTRAL for Security?

For certain security issues, serverless has neither removed the risk nor significantly worsened it. That’s what Podjarny means when he refers to them as “neutral.” These are things you still need to worry about.

That said, “neutral” can mean “worse” in some cases. Because serverless has denied attackers access in some areas, they’ve shifted attention to the vulnerabilities that remain. That includes some of these theoretically “neutral” areas.

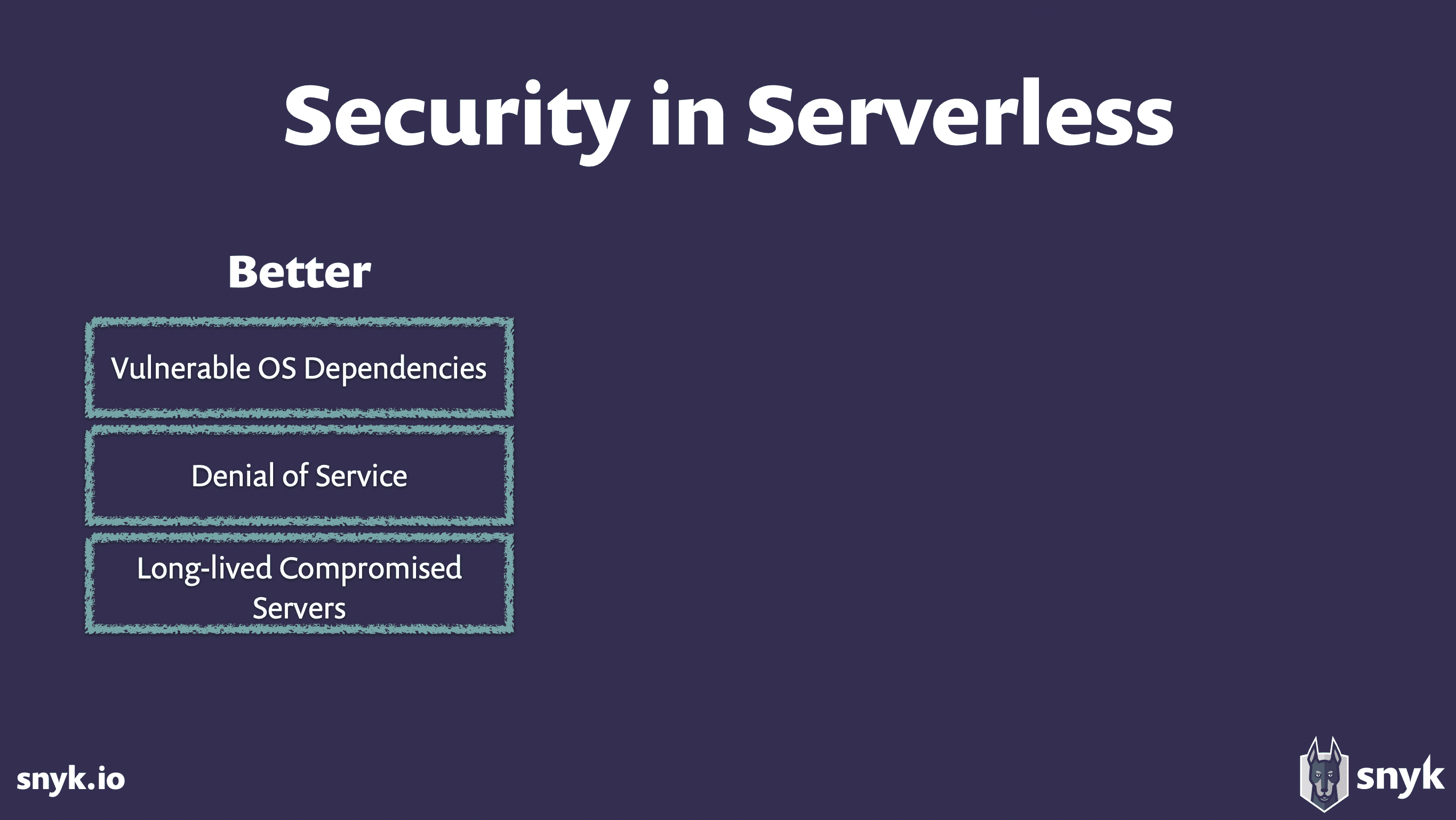

Permissions and Authorizations

Why You Still Need to Worry

Serverless actually offers improved capabilities in this area: you can now set permission models on smaller units of code, down to a single function. But we haven’t yet figured out how to fully capitalize on this. Also, when deploying several functions, it’s easiest to give every function in the stack the broadest set of permissions that any one of them needs. So, generally speaking, functions end up with more permissions than they need.

As a result, you’ve now got several questions to answer: Who can invoke your function? Who can access its code and environment variables? When you have a sequence of functions and one is compromised, what happens? Can the compromised function access sensitive data or compromise another function?

Tips and Tricks for Serverless Security

Podjarny advises being strict and deliberate from the outset:

- Get granular in your policies and permissions, and keep them that way. Think about functions individually, managing individual policies for each of them. Of course, this is difficult to do at scale. (Tools to automate the process are under development; more on that below.)

- Use the “least privilege” principle, giving each function the minimum permissions. As Podjarny explains, as functionality is added, the natural state of any policy is to continuously expand.
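One way to keep per-function policies granular is to generate them from a small, explicit declaration of what each function needs, and to reject wildcards before deployment. Below is a minimal Python sketch that assumes DynamoDB-backed functions. The function names, table names, region, account ID, and the `NEEDS` mapping are hypothetical. The policy-document shape and the `dynamodb:GetItem`/`dynamodb:PutItem` action names follow AWS IAM conventions.

```python
# Hypothetical per-function declarations: only the actions and tables each one needs
NEEDS = {
    "get-order": {"actions": ["dynamodb:GetItem"], "tables": ["orders"]},
    "create-order": {"actions": ["dynamodb:PutItem"], "tables": ["orders"]},
}

REGION = "us-east-1"          # assumption
ACCOUNT_ID = "123456789012"   # placeholder account ID


def policy_for(function_name: str) -> dict:
    """Build an IAM policy document scoped to a single function's declared needs."""
    need = NEEDS[function_name]
    resources = [
        f"arn:aws:dynamodb:{REGION}:{ACCOUNT_ID}:table/{table}"
        for table in need["tables"]
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": list(need["actions"]), "Resource": resources}
        ],
    }


def find_wildcards(policy: dict) -> list:
    """Return every Action or Resource string that contains a '*' wildcard."""
    flagged = []
    for statement in policy["Statement"]:
        for key in ("Action", "Resource"):
            values = statement.get(key, [])
            if isinstance(values, str):  # IAM allows a single string or a list
                values = [values]
            flagged.extend(v for v in values if "*" in v)
    return flagged
```

Each function then gets its own role built from `policy_for`. Running `find_wildcards` in CI is one way to catch policies drifting toward the maximum permissions of the whole stack.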

Securing Data at Rest

Why You Still Need to Worry

Nowadays, data is the holy grail of any security breach. And while your servers are now stateless, your application isn’t. FaaS apps still store data. That data can be stolen or tampered with.

Podjarny admits that serverless leans negative on this otherwise “neutral” concern. With serverless, you can’t store state (e.g., temporary tokens, passwords, session IDs) on the server, so it has to live off-box, in external data stores that become additional targets.

Tips and Tricks for Serverless Security

Podjarny advises encryption, granularity, and control:

- Encrypt all sensitive persistent data.

- Encrypt all sensitive off-box state data.

- Minimize the functions that can access each data store.

- Use separate database credentials for each function and control what those credentials do (e.g., allowing access only to specific tables or data segments).

- Monitor which functions are accessing which data (e.g., using analysis tools like AWS X-Ray).
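The advice to give each function its own database credentials can be made concrete by generating one database role per function, with table-level grants. The following is a minimal sketch that assumes PostgreSQL. The role naming scheme, the tables, and the `GRANTS` mapping are hypothetical. The role’s actual password or other authentication is assumed to be attached out of band, for example from a secrets manager.

```python
import re

# Hypothetical mapping: function -> {table: privileges it may use}
GRANTS = {
    "get_order": {"orders": ["SELECT"]},
    "create_order": {"orders": ["INSERT"], "order_audit": ["INSERT"]},
}

ALLOWED_PRIVILEGES = {"SELECT", "INSERT", "UPDATE", "DELETE"}
IDENTIFIER = re.compile(r"[a-z_][a-z0-9_]*")  # conservative, unquoted identifiers only


def grant_statements(function_name: str) -> list:
    """Emit PostgreSQL statements for a role used by exactly one function."""
    if not IDENTIFIER.fullmatch(function_name):
        raise ValueError(f"unsafe identifier: {function_name!r}")
    role = f"fn_{function_name}"
    # Credentials for the role are set separately (assumption: via a secrets manager)
    statements = [f"CREATE ROLE {role} LOGIN;"]
    for table, privileges in sorted(GRANTS[function_name].items()):
        if not IDENTIFIER.fullmatch(table):
            raise ValueError(f"unsafe identifier: {table!r}")
        unexpected = set(privileges) - ALLOWED_PRIVILEGES
        if unexpected:
            raise ValueError(f"unexpected privileges: {sorted(unexpected)}")
        statements.append(f"GRANT {', '.join(privileges)} ON TABLE {table} TO {role};")
    return statements
```

Because each role maps to one function, database audit logs can also show which function touched which table.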

Vulnerabilities in Your Code

Why You Still Need to Worry

Serverless doesn’t protect the application layer. So concerns like SQL injection, cross-site scripting, remote command execution, cross-site request forgery, and broken authentication logic are still threats. And they now get more attention from attackers, since serverless has removed other low-hanging fruit.

Tips and Tricks for Serverless Security

Podjarny recommends standard best practices topped with increased discipline and granularity:

- Begin by focusing on the OWASP Top 10 attack types.

- Employ testing to analyze your code, including both dynamic and static application security testing.

- Standardize on shared input and output processing libraries that include sanitization.

- Use API gateway models/schemas as strictly as possible.

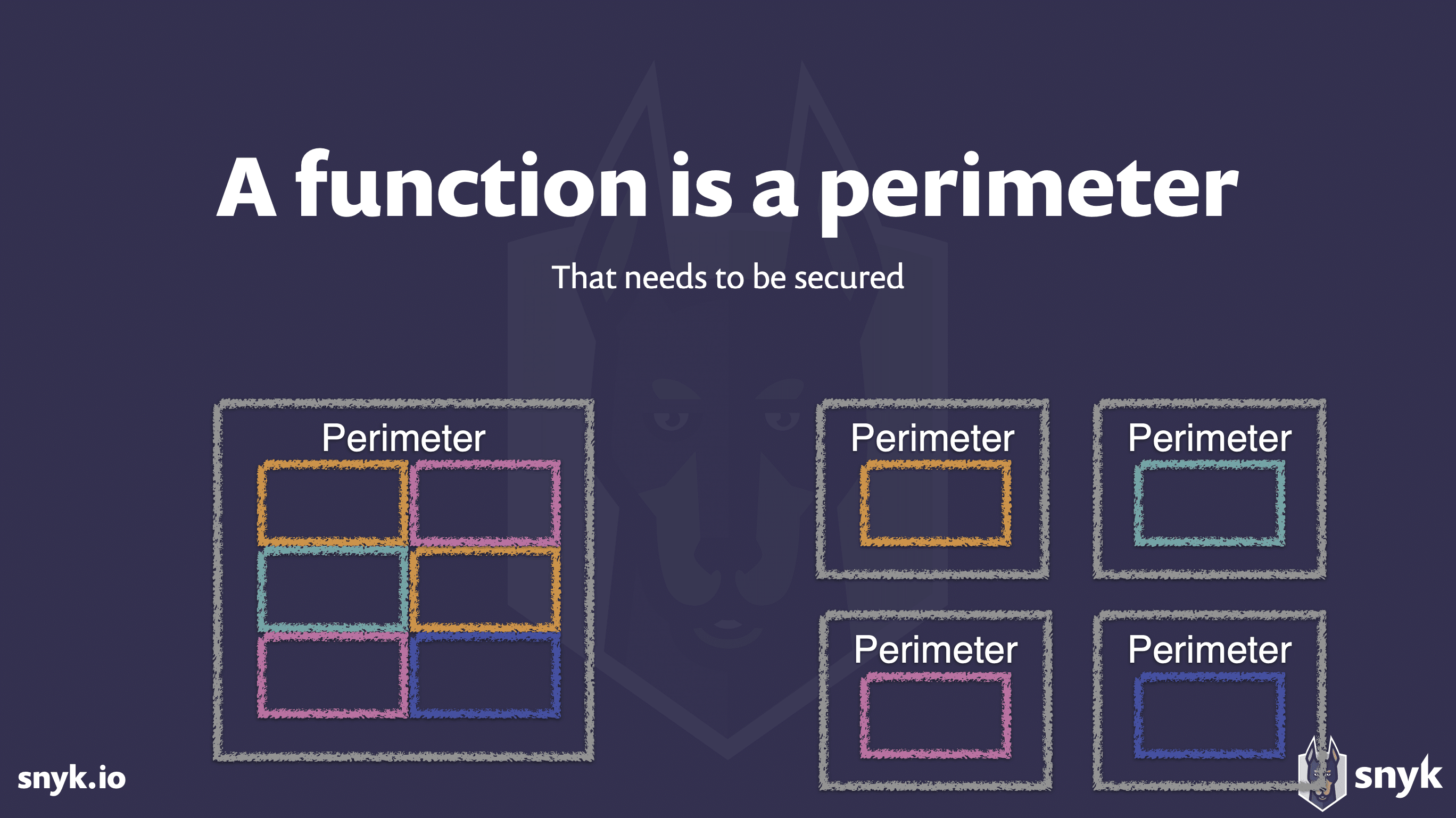

- Secure each function independently, setting up a perimeter around it. According to Podjarny, this is the most important strategy.
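For SQL injection in particular, the standard defense carries over unchanged. Validate input against a strict schema inside the function, then pass values to the database as bound parameters rather than formatting them into the query string. The following is a minimal Python sketch that uses the standard-library `sqlite3` module as a stand-in for whatever database the function actually uses. The `orders` table, the event shape, the ID format, and the `handle_get_order` handler are hypothetical.

```python
import re
import sqlite3

ORDER_ID = re.compile(r"[A-Z0-9]{8}")  # hypothetical ID format


def validate_event(event: dict) -> str:
    """Reject anything that doesn't match the expected shape exactly."""
    if not isinstance(event, dict) or set(event) != {"order_id"}:
        raise ValueError("unexpected fields")
    order_id = event["order_id"]
    # fullmatch, so trailing newlines or extra characters are rejected too
    if not isinstance(order_id, str) or not ORDER_ID.fullmatch(order_id):
        raise ValueError("invalid order_id")
    return order_id


def handle_get_order(event: dict, conn: sqlite3.Connection):
    """Look up one order after validation, using a bound query parameter."""
    order_id = validate_event(event)
    # The driver passes order_id as data; it is never parsed as SQL
    row = conn.execute(
        "SELECT id, status FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    return {"id": row[0], "status": row[1]} if row else None
```

This mirrors what a strict API gateway request schema does at the edge, but it keeps the check inside the function’s own perimeter.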

Vulnerable Application Dependencies

Why You Still Need to Worry

To minimize the code they have to write, serverless functions often leverage application dependencies and third-party code libraries. Over time, these dependencies are zipped in and forgotten, becoming stale and vulnerable. More code = more vulnerabilities. This is exactly what happened at Equifax: attackers exploited a known, unpatched vulnerability in the Apache Struts framework.

Tips and Tricks for Serverless Security

Remember: The platform manages operating system dependencies, but you’re still responsible for managing application dependencies. Increasingly, there are tools and businesses available to help you identify and fix vulnerabilities, including Podjarny’s Snyk.
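Dedicated tools check your dependencies against curated vulnerability databases, but the underlying idea is simple: compare the exact versions you ship against known advisories. The following is a minimal Python sketch. The advisory entries are hypothetical placeholders, not real vulnerability data. The parser is deliberately simplified: it only handles `name==version` pins and does not apply full package-name normalization.

```python
# Hypothetical advisories: package -> affected versions (placeholder data, NOT real)
ADVISORIES = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherlib": {"2.3.0"},
}


def parse_pins(requirements: str) -> dict:
    """Parse simple 'name==version' lines, ignoring comments and blank lines."""
    pins = {}
    for line in requirements.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        if "==" not in line:
            # An unpinned dependency can't be checked against exact versions
            raise ValueError(f"unpinned dependency: {line!r}")
        name, version = (part.strip() for part in line.split("==", 1))
        pins[name.lower()] = version
    return pins


def vulnerable(requirements: str) -> list:
    """Return sorted (name, version) pairs that match a known advisory."""
    pins = parse_pins(requirements)
    return sorted(
        (name, version)
        for name, version in pins.items()
        if version in ADVISORIES.get(name, set())
    )
```

Treating unpinned dependencies as errors is the design choice here. It forces the zipped-in versions to be explicit, so they can be rechecked whenever new advisories appear.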

When Is Serverless WORSE for Security?

To be clear, serverless hasn’t created any NEW security problems. But it has meant we’re doing certain activities more frequently. Those activities have inherent risk. Podjarny outlines three concerns that deserve more attention with serverless.

Third-party Services and Securing Data in Transit

Why You Still Need to Worry

With serverless, we’re increasingly inclined to use third-party services. Because our functions are stateless, we rely on external services for storage and much more, and we need them for a million different reasons. But those services are vulnerable, too. So you should consider:

- What data are you sharing? How well is the third-party service protecting that data?

- Is data in transit secured and/or encrypted? Is it in a VPC? Is it using HTTPS (the right solution, per Podjarny)?

- Who are you talking to? Can you validate the HTTPS certificate they’re using?

- Do you trust its responses? If the service is compromised, can it be used to compromise you?

- How do you store API keys? Though statelessness makes it tempting to hard-code credentials and commit them to GitHub and other repositories, it’s better to encrypt them with a key management service (KMS) and supply them through environment variables.
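Several of these questions can be answered in code at the point where a function calls a third party. The sketch below refuses non-HTTPS endpoints, keeps certificate and hostname verification on, and reads the API key from an environment variable. It also treats the response as untrusted input. It is a minimal Python sketch using only the standard library. The `THIRD_PARTY_API_KEY` variable, the `rate` field, its sanity bound, and the endpoint are all hypothetical. KMS decryption of the key is assumed to happen upstream and is not shown.

```python
import json
import os
import ssl
import urllib.request


def make_tls_context() -> ssl.SSLContext:
    """Default context: verifies the server certificate chain and hostname."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def api_key() -> str:
    """Read the key from the environment (hypothetical variable name)."""
    key = os.environ.get("THIRD_PARTY_API_KEY")
    if not key:
        raise RuntimeError("THIRD_PARTY_API_KEY is not set")
    return key


def parse_rate_response(body: bytes) -> float:
    """Validate the third party's reply before trusting it."""
    data = json.loads(body)
    rate = data.get("rate") if isinstance(data, dict) else None
    # bool is a subclass of int, so exclude it explicitly; the bound is hypothetical
    if isinstance(rate, bool) or not isinstance(rate, (int, float)) or not 0 < rate < 1000:
        raise ValueError("unexpected response shape")
    return float(rate)


def fetch_rate(url: str) -> float:
    """Call the hypothetical endpoint over verified HTTPS and validate the reply."""
    if not url.startswith("https://"):
        raise ValueError("refusing non-HTTPS endpoint")
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key()}"})
    with urllib.request.urlopen(req, context=make_tls_context(), timeout=5) as resp:
        return parse_rate_response(resp.read(65536))
```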

Tips and Tricks for Serverless Security

- Consider using security- and performance-monitoring tools. Since it’s a nascent space, new tools are emerging. Find something that works for your needs.

- Worry about your first-party services, too. Your least secure function could be responsible for taking down your system.

Attack Surfaces — aka “Mo’ Functions, Mo’ Problems”

Why You Still Need to Worry

Serverless allows greater granularity, which in turn means greater flexibility. Basically, you can now put your building blocks together in countless ways. While that flexibility is a positive, it also increases risk. Unanticipated consequences get multiplied very quickly.

Tips and Tricks for Serverless Security

Podjarny focuses on establishing perimeters and monitoring performance:

- Think about every function as a perimeter that needs to be secured. When you ask security questions, ask them first about the function. Ask them later about the application.

- Test every function independently for security flaws.

- Don’t rely on limiting access to a function, as access controls change over time.

- Consider your library model. Use shared input/output processing libraries, making it easier to process inputs securely.

- Limit functionality to what you actually need. Put in the effort to determine the minimum allowed for every function. Reduce to “least privilege.”

- Monitor both individual functions and full flows. Though this one’s aspirational, given the shortage of tools, you want to understand how data runs through your system and quickly detect atypical activity.
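Even without dedicated tooling, the goal of understanding how data runs through your system can start as a simple comparison. You list the function-to-resource accesses you expect, then compare them with the ones you observe, for example in trace or audit logs. The following is a minimal Python sketch. The baseline, the event format, and the function and resource names are hypothetical. Extracting events from real logs is not shown.

```python
from collections import Counter

# Hypothetical baseline: the resources each function is expected to touch
BASELINE = {
    "get-order": {"orders"},
    "create-order": {"orders", "order-audit"},
}


def find_anomalies(events) -> Counter:
    """Count accesses that fall outside the baseline, including unknown functions.

    `events` is an iterable of (function, resource) pairs, assumed to be
    extracted from tracing or audit logs.
    """
    unexpected = Counter()
    for function, resource in events:
        if resource not in BASELINE.get(function, set()):
            unexpected[(function, resource)] += 1
    return unexpected
```

An unknown function shows up as an anomaly on every access. That is deliberate, since an unregistered function touching data is itself atypical activity.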

Security Monitoring

Why You Still Need to Worry

Serverless environments make it easier and cheaper than ever to deploy functions. Just like permissions, functions are easy to add and hard to remove. Since functions’ policies naturally expand and cost no longer drives removal, you end up with lots of functions — many of which are lightly used or poorly understood — with overly open policies. And though you’re no longer wrangling servers, you’re busy wrangling functions. (At least your functions are all registered on your cloud platform.)

Podjarny pinpoints this area as his biggest concern. Zero operational cost doesn’t mean zero cost of ownership. Basically, the more functions you have, the more risk you have.

Tips and Tricks for Serverless Security

Podjarny advises being intentional and methodical about inventorying and managing functions:

- Consider before you deploy. Do you really need it?

- Separate networks and accounts for groups of functions. Put functions in buckets (e.g., cron jobs) so you know what is and isn’t disposable, and give each bucket its own permissions.

- Track what you’ve deployed and how it’s used. Don’t just rely on the platform’s inventory.

- Minimize permissions up front.

- Reduce permissions chaos-engineering style: remove them and see what breaks.

- Monitor for known vulnerabilities.
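Tracking what you’ve deployed can start with a periodic audit over your own inventory records. The audit flags functions that haven’t been invoked recently, have no owner, or carry wildcard permissions. The following is a minimal Python sketch. The `FunctionRecord` fields and the 30-day threshold are assumptions. Populating the records, for example from the platform’s function listing plus invocation metrics, is not shown.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional


@dataclass
class FunctionRecord:
    """Hypothetical inventory entry for one deployed function."""
    name: str
    owner: Optional[str]
    last_invoked: Optional[datetime]
    actions: List[str] = field(default_factory=list)


def audit(
    records: List[FunctionRecord],
    now: Optional[datetime] = None,
    stale_after_days: int = 30,
) -> Dict[str, List[str]]:
    """Return findings keyed by function name; clean functions are omitted."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=stale_after_days)
    findings = {}
    for r in records:
        issues = []
        if r.owner is None:
            issues.append("no owner")
        if r.last_invoked is None or r.last_invoked < cutoff:
            issues.append("stale")
        if any("*" in action for action in r.actions):
            issues.append("wildcard permission")
        if issues:
            findings[r.name] = issues
    return findings
```

Stale or ownerless functions are candidates for the “Do you really need it?” question. Wildcard findings feed back into minimizing permissions.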

Next Steps for Navigating Serverless Security

First off, if you haven’t already, you should adopt serverless. Alongside fairly incredible benefits of scalability, efficiency, and cost reduction, it dramatically removes or reduces several top security concerns.

Going serverless simply requires you to shuffle your security priorities. Attacks that used to be easy have become harder. But those attackers don’t give up. So, if you’ve already gone serverless, it’s time to:

- Worry about scaling the neutral risks. This involves being more granular and less permissive in your policies and perimeters, encrypting certain data, analyzing functions’ data access, and using testing and tools to find and fix vulnerabilities.

- Focus on the security risks that have become worse. This involves taking care in how you work with third-party services, performing independent security testing of functions, limiting functionality, considering library models, monitoring both functions and flows, reducing permissions, and reducing and tracking deployments of functions.

- Research and invest in serverless security scanners and testing services that fit your needs. This is a space that’s evolving by the second. New products come out regularly, but many of them are still new and somewhat unproven. Do your research and invest wisely.

Have questions about the security of your cloud? Let us know! Distillery’s cloud computing experts help enterprises of all sizes with cloud security, strategy, monitoring, management, and optimization.